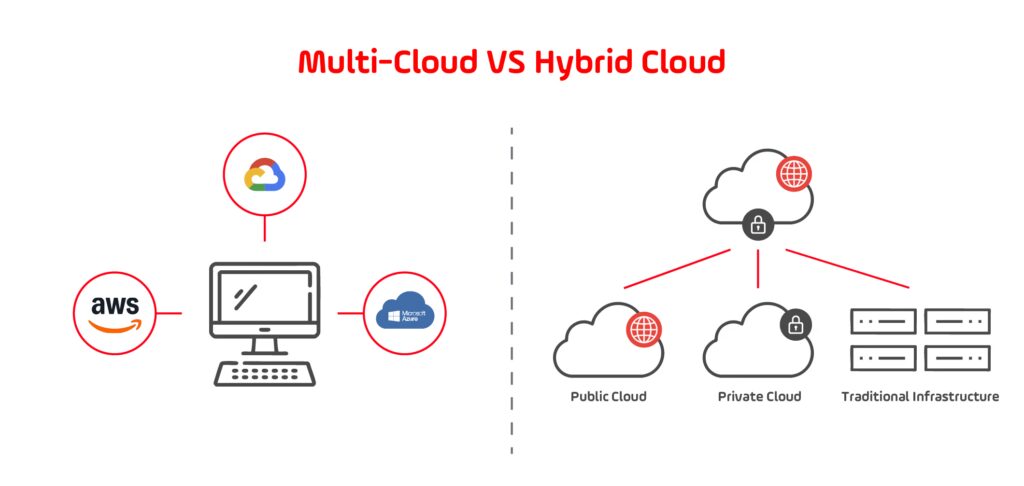

As organizations enter 2026, multi-cloud operations have reached a level of maturity that requires both architectural discipline and financial precision. The scale of AI-driven workloads, GPU-intensive training pipelines, and globally distributed data platforms expanded significantly across 2025, leading many companies to re-evaluate how they manage costs across AWS, Azure, and Google Cloud. This shift has made cloud cost optimization a top priority for engineering, architecture, and finance teams seeking predictability in environments where service pricing, consumption patterns, and performance requirements evolve rapidly. The accelerated adoption of multi-cloud ecosystems has highlighted a persistent reality: flexibility and scalability are valuable only when paired with a structured approach to cloud cost optimization.

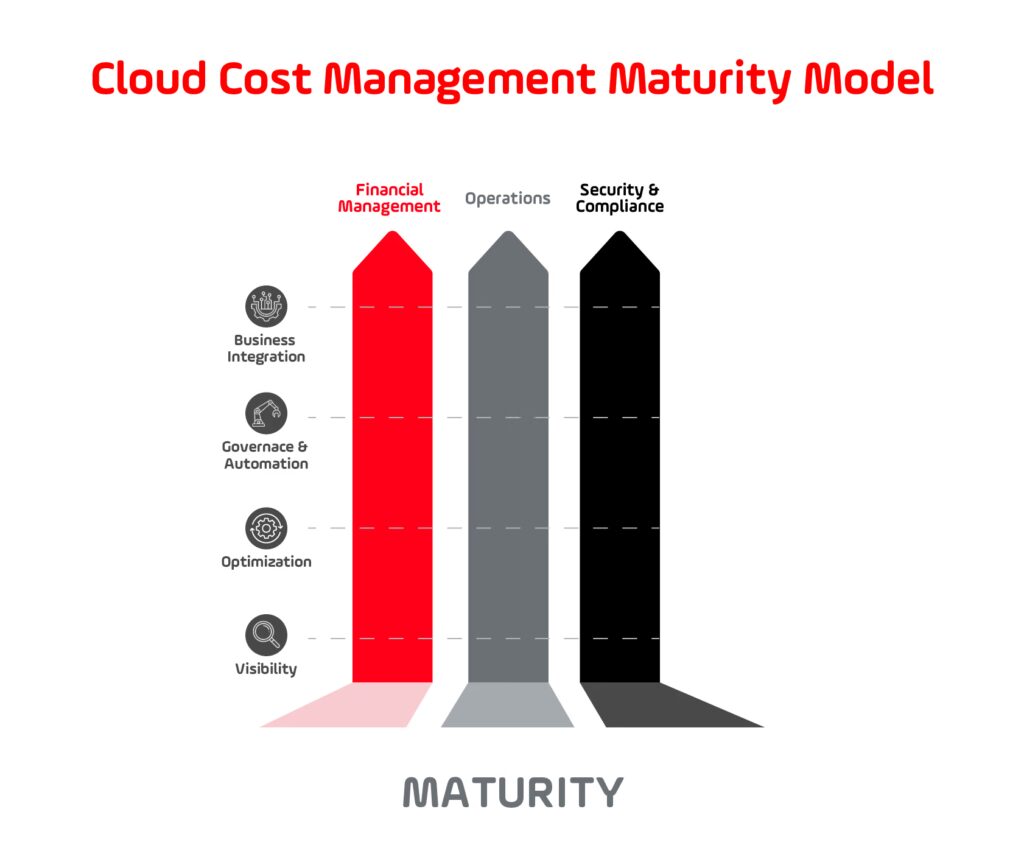

Throughout 2025, major providers introduced new usage-based pricing dimensions, upgraded cost analytics, and expanded recommendation engines that offer deeper insights into resource behavior. These advancements improved visibility but also increased the complexity of managing spend across platforms. As a result, cloud cost optimization now hinges on more than baseline monitoring or ad-hoc cleanup. It depends on operational models that blend real-time telemetry, workload benchmarking, rightsizing cycles, and governance policies that scale with the environment. Insights from industry research, including the State of FinOps Report 2025, show that organizations using structured frameworks outperform reactive approaches by a wide margin and are better positioned to manage multi-cloud growth.

Organizations that invested early in governance, automation, and financial accountability throughout 2024 and 2025 demonstrated measurable improvements in efficiency. Engineering teams leaned on automated guardrails to prevent resource sprawl, while finance teams adopted models that forecast consumption tied to product roadmaps and workload expansions. These practices made cloud cost optimization a continuous discipline rather than a quarterly review process. The most successful companies adopted a forward-looking mindset, using historical data and platform capabilities to anticipate future demands instead of responding to overspend after it occurred. This operational maturity reflects a broader industry understanding that cloud cost optimization must align with performance strategy, not compete with it.

This blog post examines the methods that have proven most effective as enterprises refine their multi-cloud strategies heading into 2026. From gaining accurate cost visibility to improving workload placement, automating governance, and forecasting at scale, the following sections outline the techniques that consistently support cloud cost optimization across complex environments. By applying these principles, organizations can build resilient and financially sustainable architectures that support rapid innovation without compromising control. These practices form the foundation of cloud cost optimization in a landscape where both technology and financial expectations are evolving faster than ever.

Cloud Cost Visibility in Multi-Cloud Environments

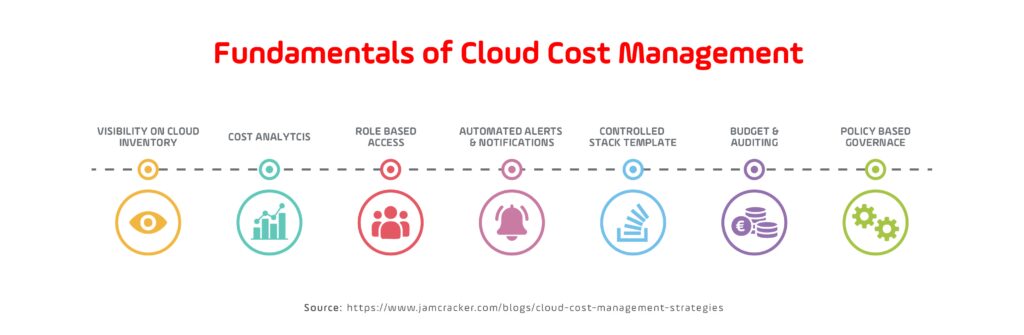

Achieving accurate visibility across AWS, Azure, and Google Cloud is one of the most critical prerequisites for effective multi-cloud operations. Without a unified financial view, organizations struggle to establish reliable attribution, understand consumption patterns, and anticipate costs across distributed environments. As platforms introduce new service tiers, AI-related pricing, and region-specific charges, the challenge intensifies. For this reason, mature visibility practices have become foundational to dependable cloud cost optimization, ensuring that financial decisions stay aligned with technical realities at scale.

Navigating Fragmented Billing Models Across AWS, Azure, and Google Cloud

Each provider publishes billing data in different formats, with distinct pricing units, discount structures, and metadata. This fragmentation makes it difficult to compare workloads or create a single source of truth. AWS expanded its cost and usage reporting capabilities through 2024 updates, while Azure and Google Cloud enhanced their analytics and export frameworks during the same period. Although these improvements are valuable, organizations still need cross-provider structures that translate raw data into a normalized framework that supports cloud cost optimization.

Building a Unified Cost Repository Through Normalized Data and Tagging Standards

Organizations that built consolidated cost warehouses in late 2024 and 2025 relied on automated ingestion pipelines to unify CUR files, Azure usage details, and Google Cloud billing exports. These systems normalize metrics such as usage type, region, service category, and discount application. However, normalization only works when combined with strong tagging and metadata standards. Inconsistent tagging is one of the leading causes of inaccurate attribution, and it directly interferes with cloud cost optimization by obscuring ownership and masking anomalies that would otherwise be visible.
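
As a rough illustration of the normalization step, the sketch below maps rows from three providers onto one record type and totals spend per owner tag. The field names in `FIELD_MAP` are simplified, hypothetical stand-ins rather than the real export schemas, and the sketch assumes every cost is already expressed in USD.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostRecord:
    provider: str
    service: str
    region: str
    cost_usd: float
    owner: str  # value of the ownership tag, or "untagged"


# Hypothetical, simplified field names. Real billing exports use
# different and much richer schemas.
FIELD_MAP = {
    "aws": {"service": "product_code", "region": "region",
            "cost": "unblended_cost", "tags": "tags"},
    "azure": {"service": "meter_category", "region": "resource_location",
              "cost": "cost", "tags": "tags"},
    "gcp": {"service": "service_description", "region": "location",
            "cost": "cost", "labels": "labels", "tags": "labels"},
}


def normalize(provider, row, owner_key="owner"):
    """Map one provider-specific row onto the shared CostRecord shape."""
    fields = FIELD_MAP[provider]
    # Tag keys are lower-cased so "Owner" and "owner" attribute to the same team.
    tags = {k.lower(): v for k, v in (row.get(fields["tags"]) or {}).items()}
    return CostRecord(
        provider=provider,
        service=str(row[fields["service"]]),
        region=str(row[fields["region"]]).lower(),
        cost_usd=float(row[fields["cost"]]),
        owner=tags.get(owner_key, "untagged"),
    )


def cost_by_owner(records):
    """Total cost per owner; untagged spend shows up as its own bucket."""
    totals = {}
    for record in records:
        totals[record.owner] = totals.get(record.owner, 0.0) + record.cost_usd
    return totals
```

Keeping an explicit "untagged" bucket, instead of silently dropping rows without an owner, makes the attribution gap itself visible in the report.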

Leveraging Provider-Native Exports and Analytics to Improve Financial Accuracy

Provider-native tools have evolved significantly, offering detailed recommendations and higher-resolution data. AWS provides event-level cost metadata; Azure enhances anomaly detection; Google Cloud includes rightsizing insights directly in billing exports. These capabilities help identify waste, detect cost spikes, and analyze long-term trends. When combined with normalized data, they form a reliable analytical foundation that materially improves forecasting and supports proactive cloud cost optimization rather than reactive cost control.

Operationalizing Visibility With Dashboards, Alerts, and Anomaly Detection

Centralized visibility is only useful when it becomes part of daily and weekly operational rhythms. Organizations that adopted real-time dashboards and automated alerts were able to identify unexpected consumption patterns, detect misconfigurations early, and respond to cost anomalies before they escalated. These workflows give engineering and finance teams a shared operational lens and enable cloud cost optimization to function as a continuous process instead of a sporadic review.
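
One simple way to turn daily cost data into an alert is a trailing-window z-score. This is a minimal sketch, not how any provider's anomaly detection works; the window length and threshold are assumptions that would need tuning against real spend patterns.

```python
from statistics import mean, stdev


def find_anomalies(daily_costs, window=7, threshold=3.0):
    """Return indices of days that deviate sharply from the trailing window.

    A day is flagged when its cost is more than `threshold` standard
    deviations away from the mean of the previous `window` days.
    """
    anomalies = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if daily_costs[i] != mu:
                anomalies.append(i)
            continue
        if abs(daily_costs[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

For example, `find_anomalies([100, 102, 98, 101, 99, 100, 103, 101, 180, 100])` flags only index 8, the day spend jumped to 180. The first `window` days are never evaluated because they have no full history to compare against.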

How Improved Visibility Directly Strengthens Cloud Cost Optimization Decisions

Visibility provides the context needed to evaluate rightsizing opportunities, determine optimal workload placement, and enforce governance policies. Without accurate data, decisions are either delayed or based on assumptions. With strong visibility practices, organizations can validate architectural changes, track team-level spending, and establish meaningful accountability. Growin supports these capabilities through advisory and implementation services, helping clients build normalized data pipelines, governance models, and operational reporting that reinforce scalable cloud cost optimization throughout the organization.

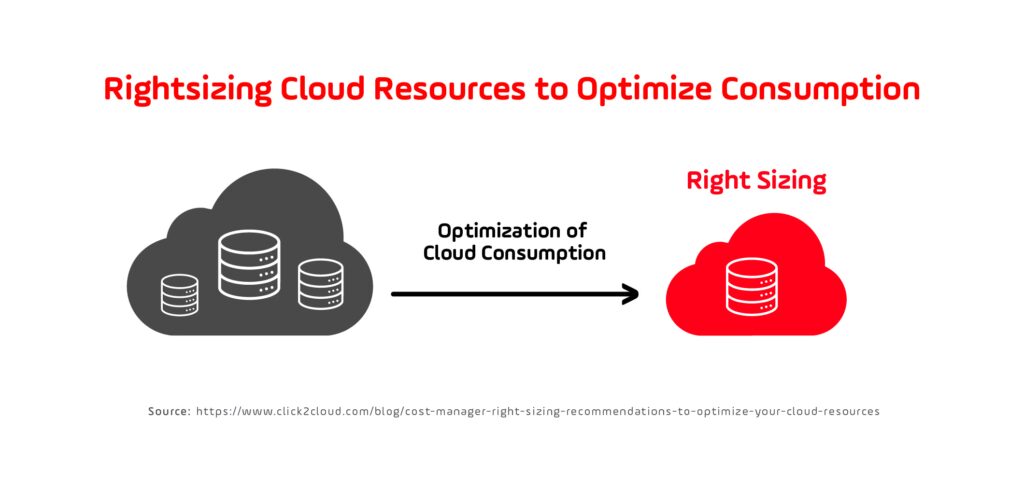

Rightsizing Compute and Storage at Scale

Many organizations discover that a significant portion of multi-cloud overspend comes from overprovisioned compute and storage resources that no longer match real usage patterns. As environments scale and teams deploy new workloads across AWS, Azure, and Google Cloud, the gap between allocated capacity and actual demand widens. This makes rightsizing one of the most consistent contributors to cloud cost optimization. Without structured rightsizing cycles, environments accumulate idle instances, oversized volumes, unnecessary snapshots, and inefficient storage tiers. As these patterns compound, the financial impact becomes substantial, especially in multi-cloud architectures where variations in pricing models can obscure resource inefficiencies.

Why Rightsizing Delivers High-Impact Efficiency Gains in Multi-Cloud Environments

Rightsizing is essential because it connects performance requirements to actual resource consumption. Across 2024 and 2025, all major cloud providers expanded recommendation engines that analyze compute and storage usage at increasing granularity. Azure Advisor’s 2025 updates introduced improved visibility into underutilized VMs and storage tiers. AWS Compute Optimizer and Google Cloud Recommender delivered similar enhancements. These tools help organizations identify workloads that can move to smaller instance families, different storage classes, or more efficient configurations, driving repeatable cloud cost optimization outcomes across environments.

Compute and Storage Rightsizing Techniques Using Provider Recommendations

Compute rightsizing typically involves adjusting instance families, reducing vCPU counts, modifying memory configurations, or moving container workloads to more efficient resource limits. Storage rightsizing often includes downgrading volume performance tiers, applying lifecycle rules to cold data, deleting inactive snapshots, and shifting infrequently accessed data to archive layers. When these adjustments are applied consistently, cloud cost optimization becomes far more predictable. Real-world implementations in late 2024 showed that compute changes generated the fastest savings, while storage adjustments produced substantial long-term reductions in sustained usage costs.
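
The compute side of this can be sketched as a small sizing rule: take a high percentile of observed CPU utilization and pick the smallest size that keeps that load under a target. The size menu, the 60% target, and the 95th percentile are illustrative assumptions, not any provider's recommendation logic, and memory, network, and burst behavior are ignored.

```python
import math

# Assumed menu of instance sizes (vCPU counts); real families differ by provider.
AVAILABLE_VCPUS = (1, 2, 4, 8, 16, 32, 64)


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_vcpus(current_vcpus, cpu_utilization, target_utilization=0.6, pct=95):
    """Smallest size that keeps the pct-th percentile load at or below target.

    `cpu_utilization` holds fractions between 0 and 1 measured on the
    current instance.
    """
    busy_vcpus = current_vcpus * percentile(cpu_utilization, pct)
    needed = busy_vcpus / target_utilization
    for size in AVAILABLE_VCPUS:
        if size >= needed:
            return size
    return current_vcpus  # nothing in the menu fits; leave the size unchanged
```

With a 16 vCPU instance whose utilization samples peak at 22%, the rule suggests 8 vCPUs. Sizing from a percentile rather than the average is a deliberate choice here, since averages hide the spikes that cause performance regressions after a downsize.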

Rightsizing for Containerized Workloads and Kubernetes Clusters

Kubernetes environments introduce additional complexity because autoscaling, resource requests and limits, and node configurations interact in ways that can inflate resource usage. Many organizations running multi-cloud Kubernetes platforms discovered that default container requests were significantly higher than actual consumption patterns. By analyzing utilization metrics and adjusting requests downward, teams reduced node counts and improved cluster efficiency. These adjustments directly support cloud cost optimization by preventing resource overprovisioning at the orchestrator level, where redundancy and scaling rules can magnify cost discrepancies.
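
The link between container requests and node count can be shown with simple packing arithmetic. The sketch below considers CPU only and assumes identical pods and nodes; memory, DaemonSets, per-node pod limits, and scheduling constraints are deliberately left out, and all figures are made up.

```python
import math


def nodes_needed(pod_count, request_millicores, node_allocatable_millicores):
    """Nodes required when pods are packed by CPU request alone."""
    pods_per_node = node_allocatable_millicores // request_millicores
    if pods_per_node == 0:
        raise ValueError("a single pod does not fit on one node")
    return math.ceil(pod_count / pods_per_node)


# 120 identical pods on nodes with 3800m allocatable CPU (illustrative figures).
before = nodes_needed(120, 500, 3800)  # default request of 500m per pod
after = nodes_needed(120, 250, 3800)   # request lowered to 250m after review
print(before, after)  # 18 8
```

Halving the request more than halves the node count in this example because seven 500m pods leave 300m of each node stranded, while fifteen 250m pods leave only 50m unused.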

Automation Workflows for Continuous Rightsizing Cycles

Manual rightsizing efforts are rarely sustainable in multi-cloud environments due to the number of workloads, frequency of deployments, and rate of configuration drift. Leading organizations automate discovery, analysis, and execution using scripts, policy engines, and infrastructure-as-code pipelines. This approach ensures that rightsizing happens regularly and consistently. When automation is integrated into operational workflows, cloud cost optimization becomes continuous rather than episodic. Automation also mitigates the risk of manual oversight and ensures that recommendations are applied before inefficiencies accumulate.

Risks and Tradeoffs When Adjusting Resource Configurations at Scale

Rightsizing is not without challenges. Over-aggressive reductions can impact performance, introduce latency, or cause resource contention. Workloads with unpredictable spikes may not align well with smaller configurations. Storage adjustments can produce access delays if data is incorrectly classified. These tradeoffs must be evaluated through benchmarks, testing, and usage modeling. Organizations therefore need to design rightsizing frameworks that balance performance and efficiency, reinforcing cloud cost optimization as a structured, data-driven practice rather than an isolated clean-up exercise.

Workload Placement Strategies: Matching Workloads to the Right Cloud

As multi-cloud architectures expand, workload placement has become one of the most important levers for improving performance and reducing unnecessary spend. Different cloud providers offer unique advantages in compute, storage, networking, and AI acceleration, making placement decisions a major driver of operational efficiency. For many organizations, misaligned workloads result in elevated consumption, increased data transfer costs, and inconsistent performance baselines. Because these issues scale quickly, workload placement is a central factor in cloud cost optimization, helping teams align technical requirements with financial outcomes.

Evaluating Price-Performance Differences Across Cloud Providers

Each major cloud platform continued refining its compute and storage offerings throughout 2024 and 2025, especially for AI and high-performance workloads. Google Cloud introduced A3 Mega GPU instances for advanced training workloads. AWS and Azure released updated instance families designed around specific performance characteristics such as memory density, vCPU efficiency, and throughput. Understanding these differences allows teams to map workloads to environments that deliver the best balance of cost and performance. This analysis directly supports cloud cost optimization by ensuring that resources operate under the conditions they were designed for.

Understanding Data Transfer, Inter-Region Costs, and Latency Implications

Workload placement also requires understanding how data moves through distributed systems. Multi-region architectures, cross-cloud data transfers, and hybrid connectivity models each influence cost profiles. Data-intensive workloads often incur substantial charges when moved between providers or regions, and latency-sensitive applications may require localized deployment. These considerations play a critical role in cloud cost optimization because misaligned placement can significantly inflate operational expenses even when compute pricing appears efficient.
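
A small worked comparison shows how transfer charges can reverse a placement decision. All prices and volumes below are hypothetical and chosen only to make the arithmetic easy to follow; real egress pricing is tiered and varies by provider, region, and destination.

```python
def monthly_cost(compute_usd, egress_gb, egress_usd_per_gb):
    """Compute spend plus data transfer charges for one month."""
    return compute_usd + egress_gb * egress_usd_per_gb


def cheapest(options):
    """`options` maps a placement name to (compute_usd, egress_gb, egress_usd_per_gb)."""
    return min(options, key=lambda name: monthly_cost(*options[name]))


# Hypothetical figures: the second option has cheaper compute but must
# pull 50 TB per month across clouds at an assumed 0.08 USD per GB.
options = {
    "same cloud as the data": (8000.0, 0, 0.0),
    "cheaper compute, other cloud": (6500.0, 50_000, 0.08),
}

for name, args in options.items():
    print(f"{name}: {monthly_cost(*args):,.0f} USD")
print("cheapest:", cheapest(options))
```

Under these assumptions the cross-cloud option saves 1,500 USD on compute but adds 4,000 USD in transfer, so the co-located placement wins.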

Placement Strategies for AI, GPU, and High-Throughput Workloads

The rapid adoption of generative AI and large-scale analytics workflows in 2024 and 2025 made placement decisions increasingly specialized. GPU availability, queue times, price variability, and accelerator architecture all influence where AI workloads perform best. High-throughput analytics systems may require storage engines optimized for parallel processing or faster local SSDs. Matching these workloads to the right cloud environment enhances performance reliability and contributes to sustainable cloud cost optimization by preventing overspending on unsuitable infrastructure.

Benchmarking and Decision Frameworks for Selecting Optimal Environments

Organizations that perform regular benchmarking gain clear visibility into how workloads behave across clouds. Benchmarking includes throughput tests, latency measurements, cost-per-transaction comparisons, and scaling evaluations. Many teams adopted structured decision frameworks to ensure consistency in placement choices, often integrating performance telemetry and historical usage into their evaluations. These frameworks reinforce cloud cost optimization by allowing teams to make unbiased, data-driven decisions rather than relying on assumptions or legacy configurations.
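
Cost per transaction is one way to put benchmark results from different clouds on a common scale. The helper below is a minimal sketch; the hourly prices and throughput figures in the usage note are invented for illustration and would come from real benchmark runs in practice.

```python
def cost_per_million(hourly_cost_usd, sustained_tps):
    """USD per one million transactions at a sustained throughput."""
    if sustained_tps <= 0:
        raise ValueError("throughput must be positive")
    transactions_per_hour = sustained_tps * 3600
    return hourly_cost_usd / transactions_per_hour * 1_000_000
```

For instance, an environment priced at 2.40 USD per hour that sustains 1,200 transactions per second costs roughly 0.56 USD per million transactions, while one at 1.80 USD per hour sustaining 800 costs 0.625 USD. The environment with the higher hourly price is the cheaper one per unit of work, which is exactly the kind of result that hourly price comparisons miss.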

Managing Tradeoffs Such as Vendor Dependence and Architectural Complexity

Effective workload placement involves balancing several tradeoffs. Consolidating certain workloads within a single cloud can reduce operational complexity but may increase dependence on proprietary services. Spreading workloads across multiple environments can improve resilience and avoid provider constraints but may introduce additional networking and orchestration challenges.

Automating Cloud Cost Optimization at Enterprise Scale

As cloud environments grow in scale and complexity, manual processes struggle to keep pace with the operational demands of multi-cloud ecosystems. Rightsizing, lifecycle management, anomaly detection, and policy enforcement all become more challenging when teams operate across multiple providers with differing tooling and pricing models. Automation has emerged as a critical enabler of cloud cost optimization because it ensures that efficiency practices are executed consistently, repeatedly, and without reliance on manual intervention. For organizations seeking predictable financial outcomes, automation provides structure and stability within environments that continuously evolve.

Moving From Manual Oversight to Preventive, Automated Controls

Manual reviews of resource consumption tend to occur sporadically and often focus on symptoms rather than root causes. In contrast, automated controls can prevent inefficient configurations from being deployed in the first place. Many organizations use predefined rules to block oversized instances, enforce tagging standards, or require cost estimates before provisioning new services. This shift toward preventive measures strengthens cloud cost optimization by ensuring that cost awareness is embedded directly into day-to-day engineering workflows.
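
A preventive control of this kind can be as simple as a function that inspects a provisioning request before it is applied. The required tags, per-environment vCPU limits, and request shape below are hypothetical; real deployments would express equivalent rules in the policy engine of their platform.

```python
# Hypothetical rules for illustration only.
REQUIRED_TAGS = {"owner", "cost-center", "environment"}
MAX_VCPUS = {"dev": 4, "staging": 8, "prod": 64}


def violations(resource):
    """Return a list of human-readable problems; an empty list means compliant."""
    problems = []
    tags = resource.get("tags") or {}

    missing = sorted(REQUIRED_TAGS - set(tags))
    if missing:
        problems.append(f"missing tags: {', '.join(missing)}")

    env = tags.get("environment")
    limit = MAX_VCPUS.get(env)
    vcpus = resource.get("vcpus", 0)
    if limit is not None and vcpus > limit:
        problems.append(f"{vcpus} vCPUs exceeds {env} limit of {limit}")

    return problems
```

A pipeline step could call `violations(request)` and stop the deployment when the list is non-empty. Returning every problem at once, rather than failing on the first, saves engineers from fixing one issue per pipeline run.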

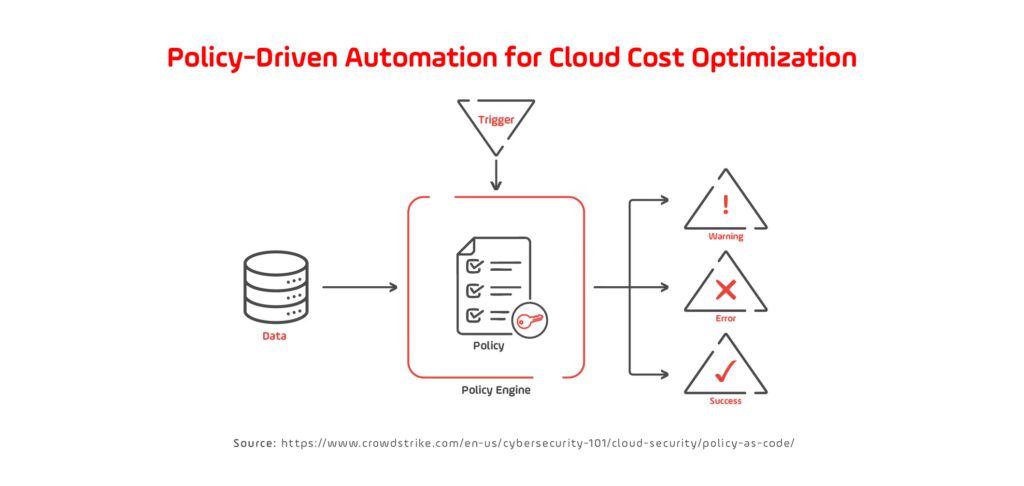

Using Infrastructure-as-Code and Policy-as-Code to Enforce Cost Rules

Automation at scale often depends on infrastructure-as-code and policy-as-code, which enable teams to codify cost-related rules across clouds. Azure Policy’s policy-as-code capabilities demonstrate how organizations apply cost controls directly within deployment pipelines. AWS and Google Cloud offer similar mechanisms through their respective governance frameworks. These practices ensure that deviations are detected early and corrected automatically, making cloud cost optimization more reliable across distributed environments.

Automation Patterns for Elasticity, Shutdown Schedules, and Resource Lifecycle

Elasticity rules that scale resources up and down based on real-time demand help ensure that capacity matches usage patterns. Automated shutdown schedules remove unnecessary consumption from development and pre-production environments outside working hours. Storage lifecycle policies automatically transition data between tiers, reducing long-term costs. These automation patterns support continuous cloud cost optimization by aligning operational activity with actual consumption trends, reinforcing predictable spending across clouds.
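
The shutdown-schedule pattern reduces to two small calculations: whether an environment should be running at a given moment, and what share of the week the schedule switches off. The sketch assumes a weekday schedule from 08:00 to 20:00 and ignores time zones and public holidays; the hours are illustrative defaults.

```python
from datetime import datetime

HOURS_PER_WEEK = 168


def should_run(now, start_hour=8, end_hour=20):
    """True on weekdays (Monday to Friday) within the working window."""
    return now.weekday() < 5 and start_hour <= now.hour < end_hour


def off_hours_fraction(start_hour=8, end_hour=20, days_per_week=5):
    """Share of the week during which scheduled resources are stopped."""
    hours_on = (end_hour - start_hour) * days_per_week
    return 1 - hours_on / HOURS_PER_WEEK
```

With these defaults a development environment runs 60 of 168 hours, so about 64% of its always-on compute hours are avoided. The actual saving is lower when storage and other charges continue while instances are stopped.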

Integrating Cost Signals Into Deployment Pipelines and Operations

Forward-thinking organizations integrate cost visibility directly into CI/CD pipelines and operational dashboards. This enables developers to understand cost implications before deploying changes and allows operations teams to detect inefficiencies as part of their standard monitoring routines. Integrating cost signals into existing workflows reinforces cloud cost optimization as a shared responsibility rather than an isolated function, ensuring that financial considerations inform every stage of the deployment lifecycle.

Monitoring Automation Outcomes to Avoid Performance or Reliability Issues

While automation introduces consistency, it must be monitored carefully to avoid unintended consequences such as resource undersizing or premature deprovisioning. Performance baselines, service requirements, and workload characteristics must remain central to any automated process, especially in environments where workload patterns shift rapidly. Organizations that validate automation outcomes regularly are better positioned to identify misconfigurations early, maintain stable performance, and adjust rules before they negatively impact operations. By treating automation as an iterative practice rather than a static configuration, teams ensure that cloud cost optimization continues to support both efficiency and reliability over time.

Multi-Cloud FinOps Governance

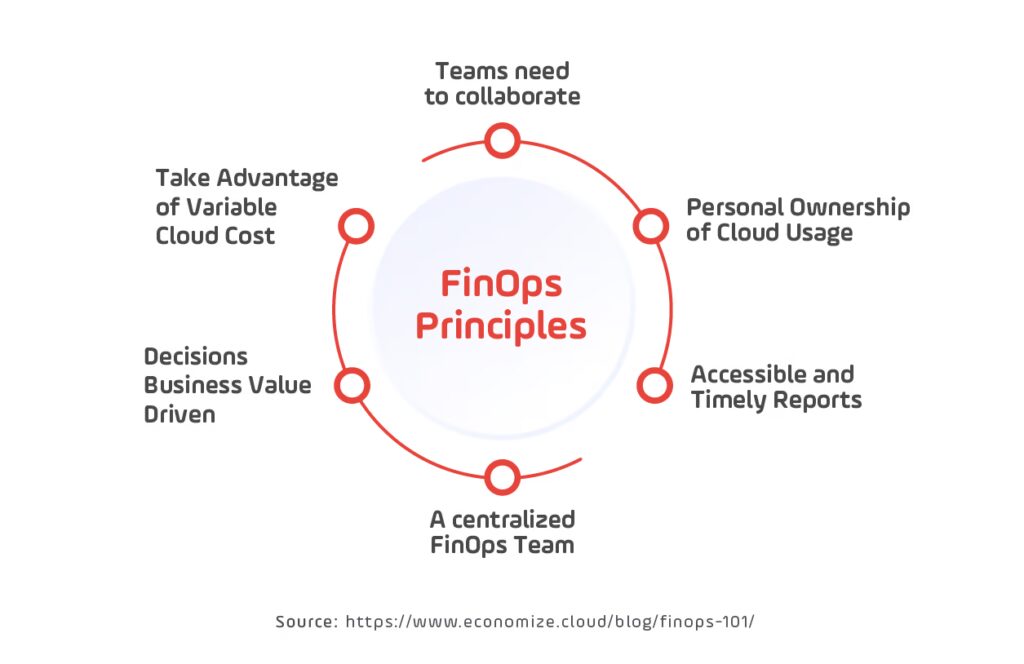

As organizations continue expanding their multi-cloud environments, governance becomes an essential foundation for financial accountability and operational clarity. Multi-cloud ecosystems introduce variability in pricing, resource taxonomies, and consumption patterns, making it difficult to maintain consistency without a structured governance model. FinOps practices have evolved significantly in 2025, emphasizing shared responsibility across engineering, finance, and product teams. This collaborative approach ensures that cloud cost optimization is not treated as a reactive cost-control exercise but as an active, continuous discipline that supports long-term business strategy.

Establishing Shared Accountability Across Engineering, Finance, and Product Teams

Effective governance begins with clearly defined ownership. Engineering teams understand service behavior, finance teams model budgets and forecasts, and product teams manage priorities and customer commitments. When these groups collaborate under a shared governance model, they create a unified framework for decision-making. This alignment prevents fragmented processes and ensures that cloud cost optimization is informed by both technical and financial realities. FinOps research reinforces the value of cross-functional collaboration in large, distributed organizations.

Standardizing Tagging, Labeling, Budgeting, and Forecasting Practices

Tagging and metadata standards are critical for accurate cost attribution. Without consistent labeling, multi-cloud environments quickly accumulate blind spots that make spend patterns difficult to analyze. Budgeting and forecasting practices also need to be standardized across teams and providers so that financial expectations remain aligned with actual usage. Organizations that adopt these practices can perform more reliable planning, strengthen accountability, and support sustained cloud cost optimization across all business units.

Governance Workflows and Review Cadences for Multi-Cloud Environments

Governance must be operationalized through predictable workflows and review cycles. Monthly financial reviews, weekly optimization discussions, and quarterly architectural assessments provide a structured rhythm for decision-making and continuous improvement. These cadences ensure that cloud cost optimization remains aligned with evolving workloads, platform updates, and business goals. As pricing models shift and new services emerge, regular governance reviews help organizations adapt quickly and maintain control over spend.

Tooling and Metrics That Support Transparent Financial Operations

Successful governance relies on clear, accessible metrics that allow teams to evaluate the impact of their decisions. Dashboards that combine utilization data, anomaly alerts, budget tracking, and forecast accuracy give organizations the insight needed to make informed decisions. Centralizing these tools across AWS, Azure, and Google Cloud environments reinforces cloud cost optimization by enabling teams to identify inefficiencies early, compare performance across clouds, and monitor the outcomes of optimization initiatives over time.
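
Forecast accuracy is one of the easier governance metrics to compute. The sketch below uses mean absolute percentage error (MAPE), which is one common choice rather than a prescribed standard; periods with zero actual spend are skipped because the percentage is undefined for them.

```python
def mape(forecast, actual):
    """Mean absolute percentage error between forecast and actual spend.

    Both arguments are equal-length sequences of per-period amounts.
    Lower is better; 0 means every forecast matched exactly.
    """
    pairs = [(f, a) for f, a in zip(forecast, actual) if a != 0]
    if not pairs:
        raise ValueError("no periods with non-zero actual spend")
    return sum(abs(f - a) / abs(a) for f, a in pairs) / len(pairs) * 100
```

A team that forecast 90 and 110 for two months that both came in at 100 has a MAPE of 10%. Tracking this figure per team over time shows whether forecasting is improving, independent of whether spend itself is rising or falling.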

Common Barriers to Governance and How to Prevent Fragmentation

Many organizations struggle with governance due to inconsistent adoption, unclear ownership, or resistance to process changes. Without clear incentives and effective communication, governance frameworks lose momentum, leading to fragmented decision-making and unpredictable costs. Overcoming these challenges requires strong leadership support, transparent expectations, and processes that integrate naturally into day-to-day engineering and financial workflows. When governance becomes part of routine operational practice, organizations maintain alignment more easily, reduce financial exposure, and reinforce long-term cloud cost optimization across teams and business units.

Real-World Multi-Cloud Cost Optimization Case Studies

Real-world implementations over the past few years show that consistent, measurable results in multi-cloud environments come from organizations that combine visibility, rightsizing, automation, and governance into a continuous operating model. These case studies illustrate how different industries approached cloud cost optimization at scale, what challenges they encountered, and which practices delivered the strongest financial and operational impact. By analyzing their experiences, it becomes clear which strategies transfer effectively across diverse architectures.

Defining the Organization’s Multi-Cloud Context and Baseline Challenges

IBM’s enterprise FinOps initiative provides one example of an organization attempting to unify spend visibility across multiple clouds. Their published insights describe early struggles with decentralized ownership, inconsistent tagging, and limited transparency into cross-cloud spending trends. Establishing a common cost taxonomy helped teams align reporting and created the foundation for sustained cloud cost optimization by ensuring that teams could reliably compare consumption across AWS, Azure, and Google Cloud.

Visibility, Rightsizing, and Placement Improvements That Delivered Outcomes

Several organizations demonstrated how early visibility improvements unlock deeper optimization opportunities. Snap’s experience on Google Cloud highlights how detailed analytics enabled more accurate workload placement and lifecycle tuning. VMware’s migration program on AWS showed that structured rightsizing of compute and storage reduced excess capacity while improving performance stability. Both studies reinforce that visibility and placement decisions directly influence cloud cost optimization outcomes across large, distributed environments.

Implementing Automation and Governance at Scale

Shell’s multi-cloud governance initiative on Azure illustrates the value of structured controls when operating globally distributed workloads. Their case study shows how policy enforcement, automated guardrails, and standardized tagging helped unify operational decision-making. As automation reduced manual intervention, teams were able to shift more attention to long-term planning and evaluation. These improvements demonstrate how governance and automation operate together to support continuous cloud cost optimization in enterprise settings.

Key Before-and-After Metrics Tied to Financial and Operational Gains

Organizations that combined observability with automation often reported significant before-and-after improvements. Datadog’s multi-cloud cost observability report details reductions in idle resources and improved anomaly detection achieved through centralized monitoring. Adobe’s cloud efficiency initiative highlights how workload rightsizing and better placement decisions supported predictable capacity planning. These cases show how structured measurement and iterative reviews strengthen cloud cost optimization over time.

Transferable Lessons Relevant to Other Multi-Cloud Organizations

Across industries, several lessons emerge consistently in mature multi-cloud environments. Teams that invest early in visibility, tagging standards, and shared financial ownership progress more quickly through ongoing optimization cycles. Organizations that adopt continuous review rhythms rather than sporadic cost cutting also tend to sustain cloud cost optimization over time.

The FinOps Foundation’s library of published resources provides real case studies and reports that show how structured governance, clear cost ownership, and defined review cadences deliver measurable improvements in spend visibility and control. Engineering teams at companies like Netflix have shared their approaches to cost control and architectural optimization on their engineering blog, where discussions of reserved capacity, autoscaling rules, and cost telemetry illustrate how cloud cost optimization can scale across large distributed systems. Similarly, engineering posts on Pinterest’s tech blog about tagging and cost allocation underline the importance of consistent metadata and accountability as drivers of sustainable optimization.

Building Sustainable Multi-Cloud Efficiency Through Continuous Optimization

As multi-cloud ecosystems expand and diversify, organizations increasingly recognize that sustained efficiency depends on more than tactical adjustments or one-time cost reduction efforts. Effective cloud cost optimization requires a continuous, structured approach that integrates visibility, rightsizing, workload placement, automation, and governance into day-to-day operations. Each of these practices strengthens the others, forming a repeatable operating model that adapts as platforms evolve and business demands shift.

The organizations that achieve the highest impact are those that treat cost management as an operational discipline rather than an afterthought. They invest in shared accountability across teams, maintain transparent metrics, automate decisions where possible, and review assumptions regularly. These behaviors create the resilience and clarity needed to operate confidently across multiple cloud providers, even as pricing models, services, and performance expectations continue to change.

Ultimately, multi-cloud success depends on the ability to balance flexibility with control. When teams establish consistent processes, adopt real-time insights, and strengthen cross-functional alignment, cloud cost optimization becomes a natural outcome of healthy engineering and financial practices. The result is a long-term foundation for predictable spending, improved system performance, and strategic decision-making grounded in reliable data.