Definition and Core Concepts

Event driven architecture is a software design paradigm in which components of a system communicate via events. An event is a signal that something has changed, such as a purchase being completed, a sensor recording a new measurement, or a user profile being updated.

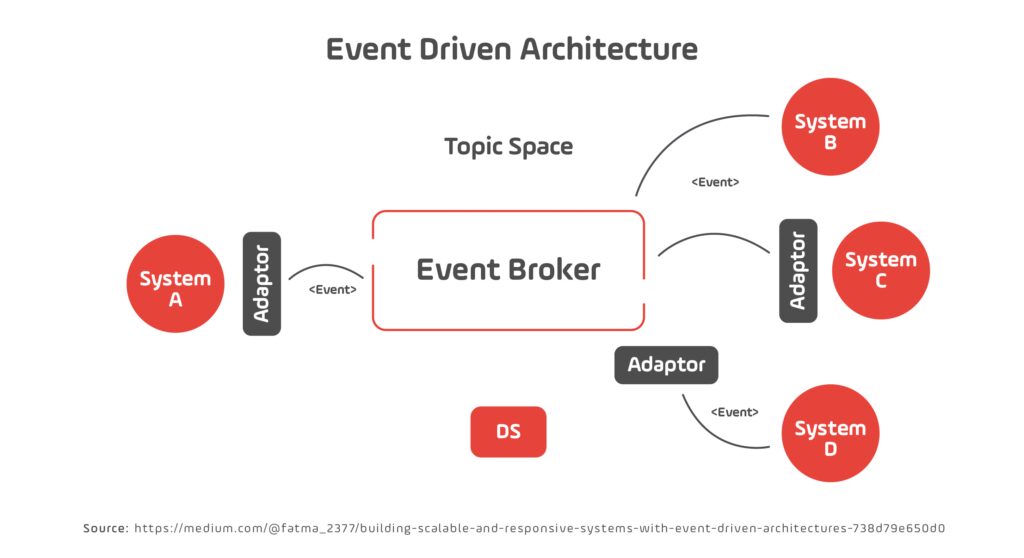

In this model:

- Producers generate events whenever a meaningful change occurs.

- Event brokers or messaging platforms capture, buffer, and distribute these events.

- Consumers subscribe to events and process them asynchronously.

The main advantage is decoupling. Producers do not need to know who consumes their events, and consumers can scale independently. This makes event driven architecture especially powerful for scaling systems across distributed environments.
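To make the producer, broker, and consumer roles concrete, here is a minimal in-memory sketch. The `InMemoryBroker` class and the `PurchaseCompleted` event name are hypothetical illustrations, and delivery here is synchronous for simplicity, whereas a real broker would buffer events and deliver them asynchronously.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class Event:
    """An immutable record that something happened."""
    type: str
    payload: Dict[str, Any] = field(default_factory=dict)


class InMemoryBroker:
    """Toy stand-in for a real broker: routes events by type to subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Event) -> None:
        # The producer never sees who is subscribed; it only hands over the event.
        for handler in self._subscribers[event.type]:
            handler(event)


broker = InMemoryBroker()
emails_sent: List[str] = []
revenue: List[float] = []

# Two independent consumers react to the same event type.
broker.subscribe("PurchaseCompleted", lambda e: emails_sent.append(e.payload["customer"]))
broker.subscribe("PurchaseCompleted", lambda e: revenue.append(e.payload["amount"]))

# The producer publishes once; both consumers receive the event.
broker.publish(Event("PurchaseCompleted", {"customer": "alice", "amount": 42.0}))
```

Adding a third consumer would require no change to the producer, which is the decoupling property described above.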

Why Event Driven Architecture Matters in 2025

The context for software development in 2025 makes event driven architecture more relevant than ever:

- Volume and speed of data: Organizations now process billions of daily events. Batch systems and synchronous APIs often struggle to keep up at that volume.

- Microservices and distributed systems: Companies break monoliths into services managed by separate teams. Decoupled event flows reduce bottlenecks.

- Resilience and reliability: By buffering events and retrying delivery, event driven architecture avoids cascading failures when one service goes down.

- Real-time user expectations: Users expect dashboards, chat, and transaction updates to appear within milliseconds.

- Cloud and serverless platforms: Services like AWS EventBridge, Google Pub/Sub, and Azure Event Grid make event driven design cost-effective and easy to scale.

Real-World Examples

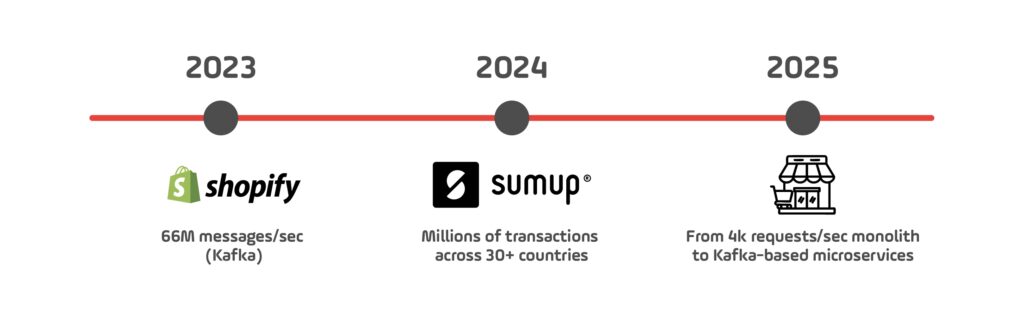

A clear demonstration of the value of event driven architecture comes from Shopify. In 2023, engineers reported that Apache Kafka is at the core of Shopify’s architecture, enabling it to handle up to 66 million messages per second across systems like checkout, payments, and inventory. By adopting event driven architecture, Shopify can scale systems independently and keep services responsive even during traffic peaks such as Black Friday.

Another example comes from SumUp, a global financial services company. In 2025, SumUp shared how they use Apache Kafka via Confluent Cloud to process millions of payment events daily across more than 30 countries. Their event driven architecture allows them to scale systems reliably during transaction spikes while maintaining compliance in regulated markets. The company also highlighted faster developer delivery cycles because teams can consume event data directly as a service.

There are also migration stories from traditional retail. In August 2025, DZone published a case study of a large e-commerce company that moved from a monolith serving over 4,000 requests per second to a microservices architecture underpinned by Apache Kafka. This shift decoupled the system’s main components, improved throughput, and reduced dependencies between teams. Event driven architecture made it possible to scale systems without rewriting every service at once.

How Event Driven Architecture Supports Scaling Systems

Event driven architecture contributes to scaling systems in several practical ways:

- Services can scale independently because they are decoupled.

- Brokers buffer and distribute events, smoothing out spikes in demand.

- Events can be partitioned, allowing consumers to process them in parallel across multiple nodes.

- Event logs make it possible to replay events for recovery or auditing, improving resilience.

- Teams can work autonomously because producers and consumers only agree on event schemas, not on direct integrations.

Trade-Offs and Limitations

Adopting event driven architecture is not without challenges.

- Increased complexity in design and debugging.

- Ordering guarantees and delivery semantics (exactly-once vs at-least-once) can introduce overhead.

- Event schemas need governance to avoid breaking downstream consumers.

- Observability becomes harder in asynchronous systems, making monitoring and tracing essential.

- Eventual consistency may create temporary mismatches across services, which requires careful communication with stakeholders.

Key Components and Tools/Platforms

Event Brokers: The Backbone of Event Driven Architecture

At the center of any event driven architecture is an event broker. This is the system responsible for ingesting events from producers, persisting them if needed, and distributing them to multiple consumers. Brokers are the backbone that makes decoupling possible and allow scaling systems without creating direct dependencies between services.

Apache Kafka

Kafka is the most widely adopted event streaming platform. It provides log-based storage, partitioning for parallelism, and replication for resilience. By design, it supports high throughput and replayability, which makes it ideal for scaling systems that process millions of events per second.

Apache Pulsar

Pulsar has been gaining traction in large enterprises because of its support for multi-tenancy, geo-replication, and unified handling of both queues and streams. Its tiered storage allows long-term event retention, which is critical when scaling systems that must replay historical data.

AWS EventBridge

EventBridge is Amazon’s fully managed event bus. It is tightly integrated with AWS services and SaaS providers, which makes it attractive for organizations that want to implement event driven architecture without managing infrastructure. For scaling systems in cloud-native and serverless contexts, EventBridge is one of the fastest options to adopt.

Google Cloud Pub/Sub

Pub/Sub is Google’s global messaging service, built for low-latency delivery at scale. It powers pipelines in analytics and IoT workloads and is often used when scaling systems requires seamless global reach with minimal operational overhead.

Schema Management and Governance

Scaling systems with event driven architecture depends on schema management. Producers and consumers must agree on event formats to avoid breaking compatibility. Without governance, large event ecosystems become brittle.

- Confluent Schema Registry enforces compatibility rules for Avro, JSON Schema, and Protobuf schemas. This ensures that consumers can continue processing events even as producers evolve.

- AsyncAPI Initiative provides a standard way to document asynchronous APIs. Much like OpenAPI standardized REST, AsyncAPI brings consistency to event driven architecture.

- EventCatalog helps teams build centralized catalogs of events, schemas, and ownership. This improves discoverability and governance as systems scale.
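The idea of a compatibility rule can be sketched in a few lines. The check below is a deliberately simplified model and not the algorithm any real schema registry uses: schemas are plain dictionaries, and the `backward_compat_problems` function and field names are assumptions made for illustration. It asks whether a consumer using the new schema could still read events written with the old one.

```python
from typing import Dict, List, TypedDict


class FieldSpec(TypedDict):
    type: str
    required: bool


Schema = Dict[str, FieldSpec]


def backward_compat_problems(old: Schema, new: Schema) -> List[str]:
    """Return reasons why events written with `old` cannot be read with `new`."""
    problems: List[str] = []
    for name, spec in new.items():
        if name not in old:
            # New optional fields are fine; new required fields break old events.
            if spec["required"]:
                problems.append(f"'{name}' is required but absent from old events")
        elif old[name]["type"] != spec["type"]:
            problems.append(
                f"'{name}' changed type from {old[name]['type']} to {spec['type']}"
            )
        elif spec["required"] and not old[name]["required"]:
            problems.append(f"'{name}' became required but old events may omit it")
    return problems


order_v1: Schema = {
    "order_id": {"type": "string", "required": True},
    "total": {"type": "number", "required": True},
}
# Adding an optional field keeps old events readable.
order_v2_ok: Schema = {**order_v1, "coupon": {"type": "string", "required": False}}
# Adding a required field does not.
order_v2_bad: Schema = {**order_v1, "currency": {"type": "string", "required": True}}

ok_problems = backward_compat_problems(order_v1, order_v2_ok)
bad_problems = backward_compat_problems(order_v1, order_v2_bad)
```

In practice a check like this would run in CI or at publish time, so an incompatible producer change is rejected before it reaches consumers.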

Processing Frameworks

Event brokers deliver messages, but many use cases require real-time processing: enriching, aggregating, or analyzing events as they arrive. Frameworks bring this capability to event driven architecture and make scaling systems more powerful.

- Apache Flink: Provides stateful stream processing with event time semantics. Used by companies like Uber, Alibaba, and Netflix to power real-time pipelines.

- Kafka Streams: A lightweight Java library for processing streams directly in microservices. It simplifies enriching or joining data without running a separate cluster.

- Apache Beam: Offers a unified model for batch and streaming. Its portability across engines like Flink, Spark, and Google Dataflow makes it attractive for hybrid event driven architectures.

Observability and Monitoring Tools

As event driven systems grow, observability becomes a scaling challenge. Without proper monitoring, it is impossible to guarantee reliability.

- OpenTelemetry standardizes distributed tracing and metrics across asynchronous systems.

- Datadog and New Relic provide native Kafka and Pulsar integrations for tracking throughput, lag, and consumer health.

- Prometheus and Grafana are widely used to visualize consumer lag, broker latency, and throughput in real time.

Real-World Examples (2023–2025)

In 2025, Shopify shared its internal architecture in the article Shopify Tech Stack on ByteByteGo. Kafka serves as the backbone for its messaging layer, enabling real-time pipelines for search, analytics, and inventory workflows. Shopify reports handling about 66 million messages per second during peak traffic, made possible by its event driven architecture.

Uber in 2024 described its approach in How Uber Manages Petabytes of Real Time Data on ByteByteGo. Uber relies on Kafka and Apache Flink to process streams of events across pricing, fraud detection, and trip monitoring. Its event driven architecture allows Uber to scale systems globally while maintaining real-time responsiveness.

Also in 2024, Uber Freight presented its shift from batch processing to streaming analytics at Confluent’s Current conference. The new platform reduced aggregation latency from 15 minutes to seconds, which dramatically improved responsiveness for logistics operations. This is a practical demonstration of event driven architecture enabling scaling systems in a mission-critical business.

The key enablers of event driven architecture are event brokers, schema governance, real-time processing frameworks, and observability tools. These components make scaling systems reliable, resilient, and adaptable in 2025. Real-world examples from Shopify and Uber confirm that these tools can handle billions of events per day at global scale.

Patterns and Strategies for Scaling Systems with Event Driven Architecture

Domain-Driven Design and Bounded Contexts

Event driven architecture works best when paired with domain-driven design (DDD). In this model, systems are divided into bounded contexts, each responsible for its own domain logic and data. Instead of tightly coupling services with direct calls, each context publishes events describing significant changes.

This design allows teams to build and deploy independently while preserving a consistent flow of information across the system. For example, in an e-commerce platform, the “Order” context publishes an OrderCreated event, the “Inventory” context consumes it to update stock levels, and the “Billing” context reacts by charging the customer.

The result is that event driven architecture enables scaling systems without entangling teams or services. Companies like Amazon and Shopify rely on bounded contexts to keep thousands of engineers working in parallel without introducing bottlenecks.

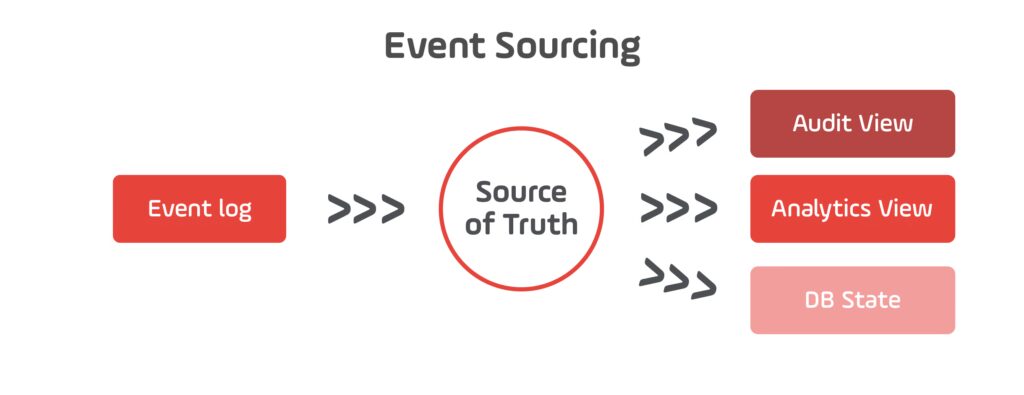

Event Sourcing: Capturing State Through Events

Event sourcing extends event driven architecture by treating events as the source of truth. Instead of only storing the current state in a database, every state change is recorded as an event in an immutable log.

Advantages for scaling systems include:

- Replayability: If a projection fails, it can be rebuilt from the event log.

- Auditability: Every state change is tracked, critical in finance, healthcare, and compliance-heavy industries.

- Multiple views: Different services can derive their own views from the same event history.

A recent case is EventStoreDB, which has seen adoption in industries such as online gaming and fintech since 2023. By storing every event, organizations can scale systems with confidence that no information is lost.
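As a minimal sketch of the replay idea, assume a hypothetical bank account whose only events are `Deposited` and `Withdrawn`. The current balance is never stored; it is derived by folding over the immutable log, which also makes it possible to reconstruct the state at any earlier point.

```python
from dataclasses import dataclass
from typing import Iterable, Union


@dataclass(frozen=True)
class Deposited:
    amount: int


@dataclass(frozen=True)
class Withdrawn:
    amount: int


AccountEvent = Union[Deposited, Withdrawn]


def apply(balance: int, event: AccountEvent) -> int:
    """Pure function: previous state + one event -> next state."""
    if isinstance(event, Deposited):
        return balance + event.amount
    if isinstance(event, Withdrawn):
        return balance - event.amount
    raise TypeError(f"unknown event: {event!r}")


def replay(events: Iterable[AccountEvent]) -> int:
    """Rebuild the balance from scratch by applying every event in order."""
    balance = 0
    for event in events:
        balance = apply(balance, event)
    return balance


event_log = [Deposited(100), Withdrawn(30), Deposited(5)]

current_balance = replay(event_log)         # state rebuilt purely from the log
balance_after_two = replay(event_log[:2])   # state as of an earlier point in time
```

Because `apply` is a pure function, a failed projection can be discarded and rebuilt by calling `replay` again on the same log.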

CQRS: Optimizing Reads and Writes

CQRS (Command Query Responsibility Segregation) is often used alongside event sourcing. In this pattern, the write side handles commands that produce events, while the read side maintains separate models optimized for queries.

When implemented in event driven architecture, CQRS improves performance and scalability by letting each side evolve independently. For example, Netflix employs real-time event pipelines: playback events (buffering, start/stop, quality changes) are streamed into telemetry systems to monitor service health, while the same event streams feed personalization systems that update recommendations in near real time.
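The split between the two sides can be sketched as follows. All names here (`PlaceOrder`, `OrderPlaced`, `OrderCommandHandler`, `OrdersPerCustomerView`) are hypothetical, and a plain list stands in for the event log that a broker or event store would provide.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PlaceOrder:
    """Command: a request that may be rejected."""
    order_id: str
    customer: str
    total: float


@dataclass(frozen=True)
class OrderPlaced:
    """Event: a fact that has already happened."""
    order_id: str
    customer: str
    total: float


class OrderCommandHandler:
    """Write side: validates commands and appends events to the log."""

    def __init__(self, event_log: List[OrderPlaced]) -> None:
        self._event_log = event_log

    def handle(self, cmd: PlaceOrder) -> OrderPlaced:
        if cmd.total <= 0:
            raise ValueError("order total must be positive")
        event = OrderPlaced(cmd.order_id, cmd.customer, cmd.total)
        self._event_log.append(event)
        return event


class OrdersPerCustomerView:
    """Read side: a denormalized model optimized for one query."""

    def __init__(self) -> None:
        self._counts: Counter = Counter()

    def project(self, event: OrderPlaced) -> None:
        self._counts[event.customer] += 1

    def orders_for(self, customer: str) -> int:
        return self._counts[customer]


log: List[OrderPlaced] = []
handler = OrderCommandHandler(log)
handler.handle(PlaceOrder("o-1", "alice", 20.0))
handler.handle(PlaceOrder("o-2", "bob", 15.0))
handler.handle(PlaceOrder("o-3", "alice", 5.0))

# The read model is built by consuming events, independently of the write side.
view = OrdersPerCustomerView()
for placed in log:
    view.project(placed)
```

The read model never touches the write-side validation logic, so either side can be changed or scaled without affecting the other.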

Partitioning and Sharding

Partitioning is fundamental to scaling systems in event driven architecture. Event brokers like Kafka and Pulsar divide topics into partitions. In Kafka, each partition is assigned to exactly one consumer within a consumer group, so adding consumers (up to the number of partitions) enables horizontal scalability.

Partitioning ensures that event driven architecture can scale to billions of events daily. However, it requires thoughtful design:

- Partition by entity key (e.g., user ID) to preserve order.

- Spread partitions evenly to maximize throughput.

- Monitor consumer lag to prevent bottlenecks.
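The first guideline, partitioning by entity key, can be sketched as follows. The `partition_for` helper is a hypothetical illustration; it uses SHA-256 as a stable stand-in hash, whereas real brokers use their own partitioners. Python's built-in `hash()` is avoided because it is salted per process for strings.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition deterministically, so one key always lands in one partition."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_partitions


events: List[Tuple[str, str]] = [
    ("user-1", "ProfileCreated"),
    ("user-2", "ProfileCreated"),
    ("user-1", "EmailChanged"),
    ("user-1", "ProfileDeleted"),
]

# Each partition is an append-only list; arrival order is kept within a partition.
partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)
for key, event_type in events:
    partitions[partition_for(key, num_partitions=4)].append((key, event_type))

# All of user-1's events sit in a single partition, in their original order.
user1_partition = partitions[partition_for("user-1", 4)]
user1_events = [etype for key, etype in user1_partition if key == "user-1"]
```

Ordering is only guaranteed per key here. Two different users may land in different partitions and be processed in any relative order.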

LinkedIn, the birthplace of Kafka, continues to rely on this strategy. In 2024, LinkedIn Engineering highlighted how design patterns such as partitioning and sharding power its feeds, messaging, and ads infrastructure at internet scale.

Backpressure and Flow Control

Event driven architecture must handle imbalances between producers and consumers. If producers generate events faster than consumers can process them, the system risks collapse.

Backpressure strategies for scaling systems include:

- Buffering in brokers: Kafka retains messages for a configured retention period, giving slower consumers time to catch up.

- Rate limiting: Consumers can apply throttling to avoid overload.

- Dead-letter queues (DLQs): Unprocessable events are moved aside, preventing them from blocking the stream.

These strategies ensure that event driven architecture remains resilient even under unpredictable traffic spikes, such as flash sales or viral social media campaigns.
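Two of these strategies, a bounded buffer and a dead-letter queue, can be sketched with the standard library. The `try_publish` and `drain` helpers are hypothetical, and the example is single-threaded for clarity; a real system would run producers and consumers concurrently and rely on the broker for buffering.

```python
import queue
from typing import Callable, List


def try_publish(buf: "queue.Queue[str]", event: str) -> bool:
    """Return False instead of blocking when the buffer is full,
    which signals the producer to slow down or retry later."""
    try:
        buf.put_nowait(event)
        return True
    except queue.Full:
        return False


def drain(buf: "queue.Queue[str]", handler: Callable[[str], None],
          dead_letters: List[str]) -> int:
    """Process everything currently buffered; park failing events in the DLQ."""
    processed = 0
    while True:
        try:
            event = buf.get_nowait()
        except queue.Empty:
            return processed
        try:
            handler(event)
            processed += 1
        except Exception:
            # One poison event must not block the events behind it.
            dead_letters.append(event)


def handle(event: str) -> None:
    if event == "bad":
        raise ValueError("cannot process event")


buffer: "queue.Queue[str]" = queue.Queue(maxsize=3)
accepted = [try_publish(buffer, e) for e in ["a", "bad", "c", "d", "e"]]

dead_letter_queue: List[str] = []
processed_count = drain(buffer, handle, dead_letter_queue)
```

The last two publishes are rejected because the buffer holds only three events, and the poison event ends up in the dead-letter list while the events around it are still processed.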

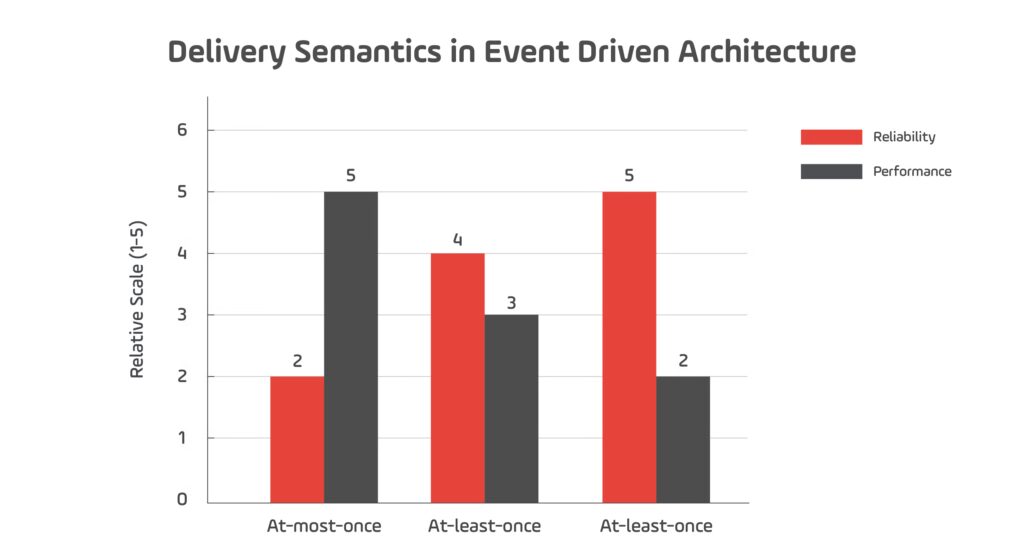

Exactly-Once Semantics

A growing trend since 2023 is the push for exactly-once processing semantics in event driven architecture. While “at-least-once” delivery is simpler, it risks duplicate events, and “at-most-once” risks data loss.

Technologies like Kafka’s idempotent producers and transactional guarantees, along with frameworks such as Flink, are making exactly-once semantics feasible at scale. This matters for scaling systems in finance, healthcare, and e-commerce, where duplicates or losses are unacceptable.

Uber’s streaming platform, for example, uses Flink with exactly-once guarantees for fraud detection and trip pricing, ensuring accuracy across billions of daily events.
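A common way to get an exactly-once effect on top of at-least-once delivery is an idempotent consumer that remembers which event IDs it has already applied. The sketch below illustrates that general technique only; it is not Kafka's transactional mechanism, and the `IdempotentLedger` class is hypothetical. A production version would persist the seen IDs atomically together with the state change.

```python
from typing import List, Set, Tuple


class IdempotentLedger:
    """Applies each event at most once, even if it is delivered repeatedly."""

    def __init__(self) -> None:
        self.balance = 0
        self._seen: Set[str] = set()

    def handle(self, event_id: str, amount: int) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if event_id in self._seen:
            return False
        self.balance += amount
        self._seen.add(event_id)
        return True


# At-least-once delivery: event "e-1" arrives twice after a simulated retry.
deliveries: List[Tuple[str, int]] = [("e-1", 10), ("e-2", 20), ("e-1", 10)]

ledger = IdempotentLedger()
results = [ledger.handle(event_id, amount) for event_id, amount in deliveries]
```

Without the deduplication step the balance would be 40 instead of 30, which is exactly the kind of error that matters in payments.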

Polyglot Persistence and Event-Carried State Transfer

Another strategy in event driven architecture is event-carried state transfer, where events contain enough data for consumers to act without querying the producer. This reduces synchronous dependencies and accelerates scaling systems.

Combined with polyglot persistence (each service using the type of database best suited to its own needs), this ensures each service can scale independently. For instance, Slack uses Kafka to propagate message events that carry all the necessary metadata for delivery and indexing, eliminating the need for direct lookups.
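Event-carried state transfer can be sketched with a consumer that keeps its own local copy of the data it needs. The `ShippingService` class and the `CustomerAddressChanged` event shape are hypothetical; the point is that the event carries the full new address, so the consumer never calls back to the producing service.

```python
from typing import Any, Dict


class ShippingService:
    """Consumer that maintains a local replica of customer addresses."""

    def __init__(self) -> None:
        # Local store owned by this service; could be any database in practice.
        self._addresses: Dict[str, str] = {}

    def on_customer_address_changed(self, event: Dict[str, Any]) -> None:
        # The event carries the state itself, not just an ID to look up.
        self._addresses[event["customer_id"]] = event["address"]

    def label_for(self, customer_id: str) -> str:
        # Answered from local data, with no synchronous call to another service.
        return f"Ship to: {self._addresses[customer_id]}"


shipping = ShippingService()
shipping.on_customer_address_changed(
    {"type": "CustomerAddressChanged", "customer_id": "c-1", "address": "1 Main St"}
)
shipping.on_customer_address_changed(
    {"type": "CustomerAddressChanged", "customer_id": "c-1", "address": "9 Oak Ave"}
)
label = shipping.label_for("c-1")
```

The trade-off is larger events and duplicated data, in exchange for consumers that keep working even when the producing service is unavailable.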

Trade-Offs of Advanced Patterns

While patterns like event sourcing, CQRS, partitioning, and exactly-once semantics empower event driven architecture, they bring challenges:

- Complexity: Debugging asynchronous flows is harder.

- Operational overhead: Multiple read models, partition balancing, and schema governance require discipline.

- Consistency models: Eventual consistency is powerful but must be communicated to users and business stakeholders.

Organizations adopting event driven architecture must weigh these trade-offs carefully. For global platforms, the benefits in resilience and scalability usually outweigh the costs.

Real-World Examples (2023–2025)

- LinkedIn: Uses partitioning and sharding in Kafka to process trillions of events daily. Event driven architecture underpins its real-time feed and ads infrastructure.

- Slack: Described in 2025 how its platform supports billions of daily messages with Kafka and Redis. Event driven architecture decouples message delivery and indexing, keeping performance stable under massive load.

- Netflix: Continues to refine its streaming platform with event sourcing, CQRS, and polyglot persistence. Event driven architecture allows Netflix to handle personalization and monitoring for over 260 million subscribers.

- Uber: In 2024, Uber explained how it manages petabytes of real-time data with Kafka and Flink, including exactly-once semantics to ensure accurate pricing and fraud detection.

Event driven architecture is more than a messaging style — it is a set of proven patterns that support global-scale systems. Domain-driven design, event sourcing, CQRS, partitioning, backpressure, and exactly-once semantics all enable resilience, flexibility, and high throughput. Real-world platforms like LinkedIn, Slack, Netflix, and Uber prove that event driven architecture is the backbone of scaling systems in 2025.

Trade-Offs, Limitations, and Common Pitfalls

Complexity in Design and Operations

Event-driven architectures add operational complexity because systems must coordinate across many asynchronous event flows. Troubleshooting a single business transaction may span dozens of events traversing multiple services. Achieving visibility across those flows requires investment in observability, tracing, and monitoring instrumentation.

For instance, Spotify’s event delivery infrastructure operates at immense scale (historically reported at up to 8 million events per second, or roughly 500 billion events daily) and routes those events through distributed pipelines. To support resilience and debugging at this scale, engineering teams at Spotify emphasize observability and pipeline health monitoring tools such as latency dashboards, error tracking, and metrics collection. In their earlier “Reliable Event Delivery System” talk, Spotify also described using tools like Kibana and PagerDuty, along with SLA alerting, to respond to anomalies in event pipelines.

Because tracing long asynchronous chains across services is nontrivial, teams often adopt correlation IDs, distributed tracing frameworks (e.g., OpenTelemetry, Jaeger), and visualization tools to tie together logs, metrics, and traces. These investments are crucial for diagnosing performance bottlenecks, finding the root cause of failures, and tracking down cascading delays.
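The correlation ID technique can be sketched without any tracing library. The `TracedEvent` type and the `start_flow`, `caused_by`, and `group_by_flow` helpers are hypothetical names: the first event in a business transaction gets a fresh correlation ID, and every event caused by it copies that ID forward.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class TracedEvent:
    type: str
    correlation_id: str  # shared by every event in one business transaction
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))  # unique per event


def start_flow(event_type: str) -> TracedEvent:
    """First event of a transaction: mint a new correlation ID."""
    return TracedEvent(event_type, correlation_id=str(uuid.uuid4()))


def caused_by(parent: TracedEvent, event_type: str) -> TracedEvent:
    """Follow-up event: propagate the parent's correlation ID."""
    return TracedEvent(event_type, correlation_id=parent.correlation_id)


def group_by_flow(log: List[TracedEvent]) -> Dict[str, List[str]]:
    """Reassemble interleaved events into per-transaction timelines."""
    flows: Dict[str, List[str]] = {}
    for event in log:
        flows.setdefault(event.correlation_id, []).append(event.type)
    return flows


order = start_flow("OrderCreated")
stock = caused_by(order, "StockReserved")
charge = caused_by(stock, "PaymentCharged")
unrelated = start_flow("ProfileUpdated")

# Events from different transactions arrive interleaved in the shared log.
event_log = [order, unrelated, stock, charge]
flows = group_by_flow(event_log)
```

Tracing frameworks automate this propagation across process boundaries, but the underlying idea is the same shared identifier.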

Eventual Consistency and Data Accuracy

In event driven systems, services often become consistent only after some delay. This works well for analytics and notifications but can be problematic for domains requiring immediate accuracy.

Goldman Sachs shared in 2023 how its event driven architecture for trading platforms had to balance eventual consistency with strict financial accuracy. They built compensating mechanisms to ensure trades were correctly reflected across services within seconds, while still benefiting from the scalability of asynchronous processing.

Event Ordering and Delivery Guarantees

Event brokers generally preserve order within partitions but not globally. This makes delivery guarantees a critical trade-off: at-most-once risks data loss, at-least-once risks duplicates, and exactly-once adds cost and complexity.

eBay engineers explained in 2024 how they tackled ordering issues while processing billions of marketplace events daily. They use idempotency keys and partitioning strategies to avoid duplicate processing while keeping throughput high.

Schema Evolution Risks

Events change as systems evolve. Without schema governance, one producer change can break dozens of consumers.

In 2025, ING Bank published a case study on adopting schema registries to manage evolution across thousands of event types. They found that enforcing backward compatibility was essential to keep their payments platform stable as it scaled across multiple countries.

Increased Infrastructure and Operational Costs

Event driven architecture requires significant infrastructure: brokers, observability pipelines, schema registries, and replay storage. While managed services exist, costs rise quickly with throughput and retention.

Pinterest highlighted this in a 2024 article on scaling its event pipeline. By migrating to a tiered storage model, they reduced Kafka cluster costs by 60% while maintaining the ability to replay events. Even so, they noted that storage and observability remained a major budget line item.

Cultural and Organizational Pitfalls

Finally, adopting event driven architecture is not only a technical challenge. Teams must adapt to asynchronous thinking and treat events as long-term contracts. Without discipline, organizations end up with duplicated events, unclear ownership, and brittle integrations.

In 2023, BBC iPlayer shared how it restructured engineering teams to align with event boundaries rather than application silos. This cultural change reduced duplicated work and allowed teams to scale their streaming platform more effectively.

In 2025, event driven architecture is the backbone of modern infrastructure, enabling real-time, scalable, and resilient systems across industries.

Event driven architecture is powerful but comes with real-world limitations. Complexity in debugging, eventual consistency, ordering, schema evolution, infrastructure cost, and organizational alignment all pose risks. Companies like Spotify, Goldman Sachs, eBay, ING, Pinterest, and the BBC illustrate how these pitfalls manifest in practice — and how thoughtful design, governance, and culture are needed to make event driven architecture sustainable at scale.

Tooling, Platforms, and Ecosystem in 2025

Cloud-Native Event Brokers

In 2025, cloud providers are competing heavily in the event broker space. Managed services lower the barrier to entry and make event driven architecture easier to adopt at scale.

- AWS EventBridge: Offers tight integration with AWS Lambda, S3, and Step Functions. It supports rule-based routing, schema discovery, and SaaS integrations.

- Google Pub/Sub: Provides global low-latency messaging with autoscaling. It has become a default choice for IoT and analytics pipelines due to its ease of scaling systems.

- Azure Event Hubs: Microsoft’s streaming platform supports real-time ingestion from IoT, telemetry, and business events. It integrates with Azure Stream Analytics and Power BI for visualization.

Open-Source Ecosystem

The open-source ecosystem for event driven architecture remains vibrant in 2025, with tools filling specialized niches.

- Apache Kafka: Still the most widely used, with the ecosystem expanding through Confluent’s platform and new open-source tooling. Kafka now supports tiered storage and improved exactly-once semantics.

- Apache Pulsar: Popular for multi-tenancy and geo-replication. Pulsar relies on Apache BookKeeper for durable storage and offers Pulsar Functions for lightweight processing.

- NATS: Lightweight messaging system focused on simplicity and performance. In 2025, NATS JetStream has been adopted for edge computing and IoT workloads.

Event Processing Frameworks

Frameworks for stream processing continue to mature, making event driven architecture more accessible for developers.

- Apache Flink: Supports batch and stream processing with advanced stateful computations. Flink 2.0 was released in 2025, and the 2.x line has been adding support for machine learning inference over streams.

- Kafka Streams: Embedded processing framework for microservices that consume directly from Kafka. Its lightweight approach makes it ideal for scaling systems with minimal overhead.

- Apache Beam: Provides a unified API for batch and streaming. With strong support from Google Dataflow, Beam is now widely used in multi-cloud strategies.

Observability and Governance Platforms

As event driven architecture grows, monitoring and governance have become a priority.

- Confluent Control Center: Offers metrics, lag monitoring, and schema management for Kafka-based systems.

- Datadog & New Relic: Both expanded their integrations in 2024 to cover Kafka, Pulsar, and EventBridge. These platforms provide visibility into consumer lag, throughput, and error rates.

- AsyncAPI: Now considered the industry standard for documenting event-driven APIs, making integration smoother across teams and partners.

Developer Experience and Low-Code/No-Code Tools

A major shift in 2025 is the rise of developer productivity platforms. Event driven architecture adoption used to require deep Kafka or Pulsar expertise. Today, companies can start faster thanks to abstraction layers.

- Temporal.io: Provides workflow orchestration that abstracts away retries, backpressure, and timeouts while integrating with Kafka and cloud brokers.

- Zapier and n8n.io: While traditionally used for SaaS automation, both have expanded to support event-driven workflows across enterprise systems. These tools help non-engineers adopt event driven architecture for business processes.

Security and Compliance Tools

As event driven architecture spreads into regulated industries, security and compliance tooling is becoming critical.

- AWS Audit Manager for EventBridge: Helps organizations document compliance with GDPR and HIPAA when using serverless events.

- Confluent Cluster Linking with RBAC: Introduced in 2023, it allows global Kafka clusters to enforce role-based access control, ensuring event data privacy at scale.

- OPA (Open Policy Agent): Increasingly embedded into event-driven pipelines to enforce fine-grained access control on event consumption.

AI and Machine Learning Integration

In 2025, event driven architecture is powering real-time AI pipelines. Rather than batch training and inference, events trigger model updates and predictions instantly.

- Databricks: Introduced streaming feature stores in 2024, letting ML systems consume events directly to update models in near real time.

- Flink ML: The Flink 2.x line has been adding APIs to integrate inference into streaming jobs, making event driven architecture a backbone for online recommendation engines.

- Microsoft Fabric: Combines event ingestion, ML training, and Power BI dashboards in one platform, targeted at real-time business intelligence.

Real-World Examples (2023–2025)

- Pinterest: In 2024, Pinterest described how its ML infrastructure evolved toward streaming architectures to support more responsive ad conversion models.

- ING Bank: Highlighted in EDA case studies as adopting event-driven systems to govern complexity and scale across European financial platforms.

- Cloudflare: Shared how its “Building Cloudflare on Cloudflare” initiative underpins its modular, distributed infrastructure—showing how event-driven systems can scale at a global edge.

- NATS: Documented as a lightweight, high-performance messaging broker designed for cloud-native event routing, offering an alternative to heavier systems like Kafka.

The ecosystem for event driven architecture in 2025 is broad and rapidly evolving. Cloud-native brokers, open-source projects, developer productivity platforms, compliance tooling, and AI integrations are reshaping how companies adopt event driven architecture. Case studies from Pinterest, ING Bank, Cloudflare, and Databricks show how this ecosystem enables organizations to balance cost, governance, intelligence, and global scalability.

Closing the Loop: Event Driven Architecture’s Role in Modern Infrastructure

Recap of Key Insights

Event driven architecture has evolved from a niche pattern to a mainstream foundation for scaling systems in 2025. Across industries — from e-commerce and finance to logistics, media, and AI — organizations are proving that event driven architecture delivers:

- Decoupling: Producers and consumers evolve independently.

- Scalability: Partitioning, sharding, and brokers enable global-scale throughput.

- Resilience: Event sourcing and CQRS provide replayability, recovery, and flexibility.

- Real-time intelligence: Integration with machine learning and analytics allows instant insights.

At the same time, we’ve seen that event driven architecture comes with trade-offs: complexity in debugging, eventual consistency, schema evolution risks, and infrastructure costs. The success stories from Shopify, Uber Freight, ING, Cloudflare, Databricks, and BBC iPlayer show that with the right governance, these challenges can be managed effectively.

Strategic Next Steps for Organizations

- Start Small, Scale Gradually: Begin with one bounded context, such as payments or notifications, and evolve it into event driven architecture before scaling systems across the enterprise.

- Invest in Governance Early: Adopt schema registries, AsyncAPI documentation, and strong ownership models from the start. This avoids brittle integrations and accelerates team velocity later.

- Prioritize Observability: Build in tracing, logging, and metrics for every service in the pipeline. Without observability, debugging asynchronous event chains becomes impossible at scale.

- Match Tooling to Context: Choose the right broker and framework for your use case. Kafka may be ideal for global throughput, while NATS or EventBridge may better fit lightweight or serverless workloads.

- Leverage AI and Analytics: Integrate real-time pipelines with machine learning and analytics platforms. This transforms event driven architecture from infrastructure plumbing into a driver of business value.

Why 2025 Is the Year of Event Driven Architecture

With the convergence of cloud-native brokers, open-source maturity, governance frameworks, and real-world success stories, 2025 represents the tipping point. Event driven architecture is no longer experimental — it is the backbone of modern digital infrastructure.

Organizations that embrace it strategically will unlock real-time customer experiences, operational agility, and intelligent decision-making. Those that don’t risk being left behind by competitors who can scale systems with speed and precision. This is why collaborating with the right partners is so important. If you’re ready to take the leap, contact us and we’ll start right away.

Suggested Next Steps for Readers

If you’re considering event driven architecture for your organization in 2025:

- Audit your current architecture: Identify synchronous bottlenecks where events could decouple systems.

- Explore case studies: Learn from pioneers like Shopify, Uber Freight, and ING Bank.

- Pilot with real events: Build a small pipeline using Kafka, Pub/Sub, or EventBridge to test viability.

- Engage with experts: Partner with consultancies or technology providers who specialize in scaling systems through event driven architecture.